In Part 2 I looked at Garbage Collection and the part that it plays in Flash Storage, and Wear Levelling is a key link between the two processes.

Wear Levelling is the process by which the drive itself (in an individual SSD) or the operating system of the array manages the write process as data is committed to the cells. The overall purpose of Wear Levelling is to ensure that the Write Cliff is never reached by ensuring that the data is spread evenly over the cells in the array over the lifespan of the array.

Wear Levelling can be managed in 2 forms, Static and Dynamic.

Static

Static Wear Levelling can be best described as the process of moving immovable cells. Operating systems will create static blocks which it commits to disk, and manages the location of, through the LBA (Logical Block Address) Table. Since these blocks are seldom re-written, they tend to hit a cell once and stay there, effectively holding the wear count at a low level. This isn’t a problem, so much as it is hugely inefficient and it will dramatically shorten the life of your array because you are reducing the number of cells that can be written to.

Static Wear Levelling references the LBA Table hunting for static blocks and moves them to cells with higher wear counts, effectively freeing up the cell with a lower count into the write lifecycle of the array.

Dynamic

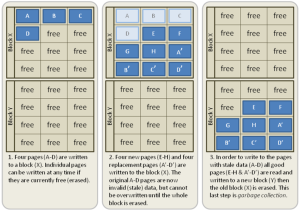

Dynamic Wear Levelling is the process whereby rapidly changing data is updated in the LBA Table as invalid and subsequently written to a different cell to which it was originally assigned. The intelligence comes in arrays that manage this dynamic process by retaining information on the cell write count for the drives in the array. Naturally, it is impossible to retain the count on all cells, so a representative sample is maintained.

How Does It Affect Me?

This process is integral for extending and maintaining the life of your array. Put in mathematical terms, it can be displayed below.

Assuming that an MLC drive will have a lifespan of 10,000 write/erase cycles, and there are 81,920 blocks of 2MB each in a 160GB SSD Drive

160GB x 1024 = 163,840MB

163,840 / 2MB = 81,920 Blocks

Approximately 20% of an unmanaged SSD drive is reserved for systems management, buffer space for wear levelling and garbage collection and other system processes, so factoring that in, we have 65,536 Blocks available for the write process.

If we take those 65,536 Blocks and work on the basis of the write process being 15% efficient and that we write to each individual block twice every second then we are left with the following calculation.

65,536 Blocks x 10,000 Writes x 15% Efficiency = 98,304,000 Total Writes

98,304,000 / (2x 60 x 60 x 24 x 365) = 1.56 Years

Which is fairly short term in the scheme of IT purchases. I have seen evidence of this, and heard plenty of stories in the wild of this happening, and the figure of 10,000 writes depends on your drive lithography and manufacturer, as all drives are certainly not created equal.

If we then compare this to a fully managed array, and assuming that the wear levelling efficiency isn’t perfect and operates at 80% we see the calculation change

65,536 Blocks x 10,000 Writes x 80% Efficiency = 524,288,000 Total Writes

524,288,000 / (2x 60 x 60 x 24 x 365) = 8.31 Years

This is largely down to the fact that you are using software to manage this process, and also the compute power in an array is far far in excess of that of a single, unmanaged SSD drive. When you combine other software managed aspects like Block Alignment and TRIM (The subject of Part 4), then you will find that the lifespan of your SSD array will far exceed 8.31 years.